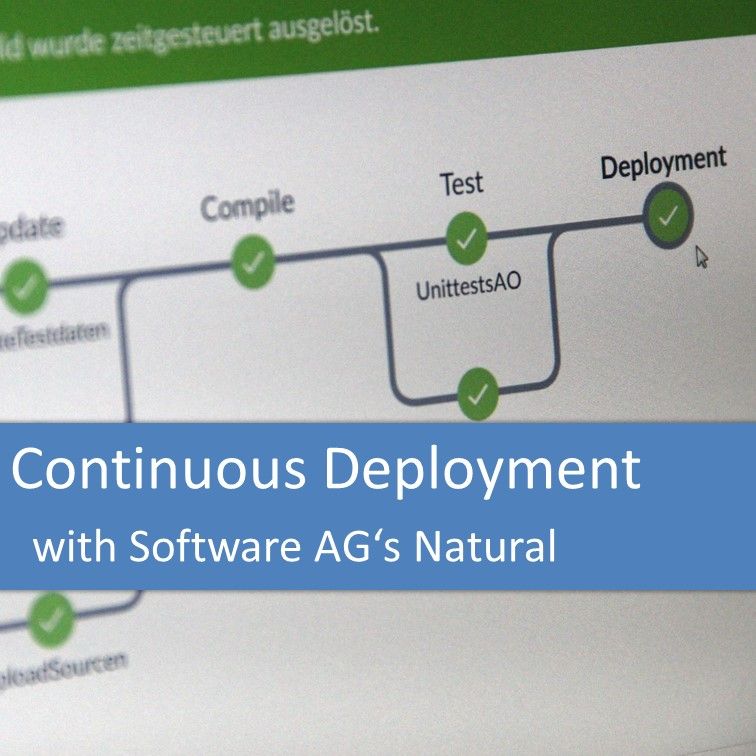

Continuous Deployment with Natural – Legacy Coder Podcast #6

After you have automated the build process for your application based on Software AG’s Adabas and Natural, it’s time to take the next step and deploy the changes to production after each push to Git! I’ll tell you how in the sixth episode of the Legacy Coder Podcast. Recap: Automating your build process with …