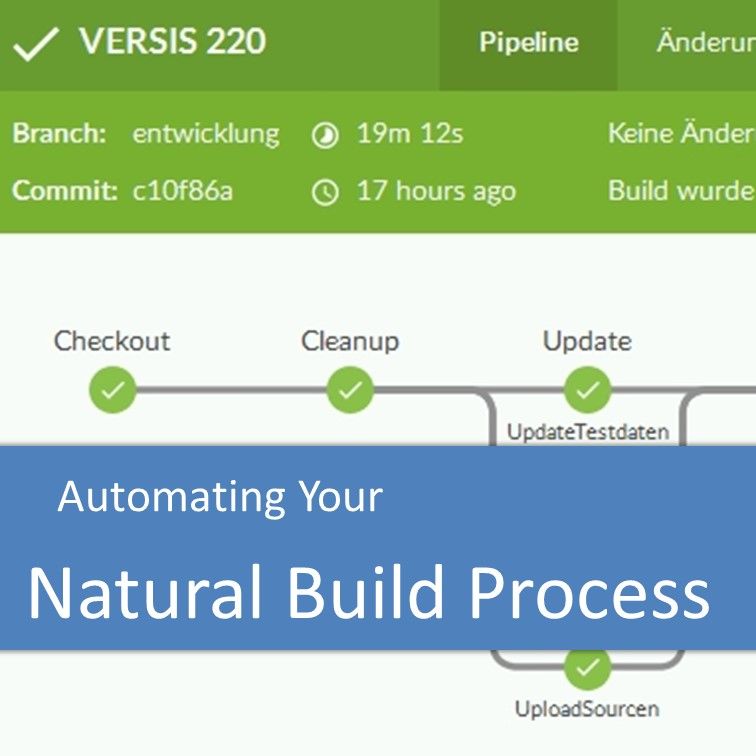

Automating Your Natural Build Process – Legacy Coder Podcast #2

How can you automate the build process for an application based on Software AG’s Adabas and Natural? In the second episode of the Legacy Coder Podcast, I talk about our journey towards a completely automated build from scratch after each push to Git.

How to automate the build process for an Adabas/Natural application

Why would …